This Home Robot Clears Tables and Loads the Dishwasher All by Itself

November 19, 2025

Sunday Team

We present a step-change in robotic AI: solving ultra long-horizon tasks, generalizing room-scale mobile manipulation to new environments, and advancing the dexterity frontier, all without a single trajectory of teleoperation data.

Sunday’s mission is simple: Put a robot in every home. This is an exciting future—one that returns time to people for family, friends, and the passions they love. Since day one, we have been driven by a single question: How do we accelerate this future 10× or even 100× faster?

As a full-stack company, we can identify bottlenecks across the entire system. We know that performance is always constrained by the weakest link. While many in robotics debate whether that link is hardware, compute, or funding, we believe there is only one definitive answer: data. Large Language Models (LLMs) thrive because they train on internet-scale text; robotics has no equivalent, no internet-scale corpus of real-world manipulation data.

How do we bridge this gap?

The standard approach is teleoperation: a human controls the robot remotely to teach it. While useful for demos, this creates a scaling "deadlock." You cannot deploy robots at scale without intelligence, but you cannot build intelligence without the data from large-scale deployment. Companies like Tesla benefit from millions of vehicles collecting data every day—yet even for them, it took a decade to accumulate enough to see true progress.

Our key insight is that to solve the data problem, we must solve the "embodiment mismatch problem." If we can align the robot’s form factor with the human body, any data captured from humans can be directly translated to train robots. There are 8 billion humans on Earth; leveraging their daily movements would allow us to bootstrap intelligence, break the data deadlock, and unlock large-scale deployment of robots.

However, leveraging this data is hard. After millions of years of evolution, human hands possess a level of dexterity that is remarkably difficult to replicate. While some robotic hands look human, none have achieved true functional parity. If the robot hand doesn't match the human hand, the data doesn't transfer.

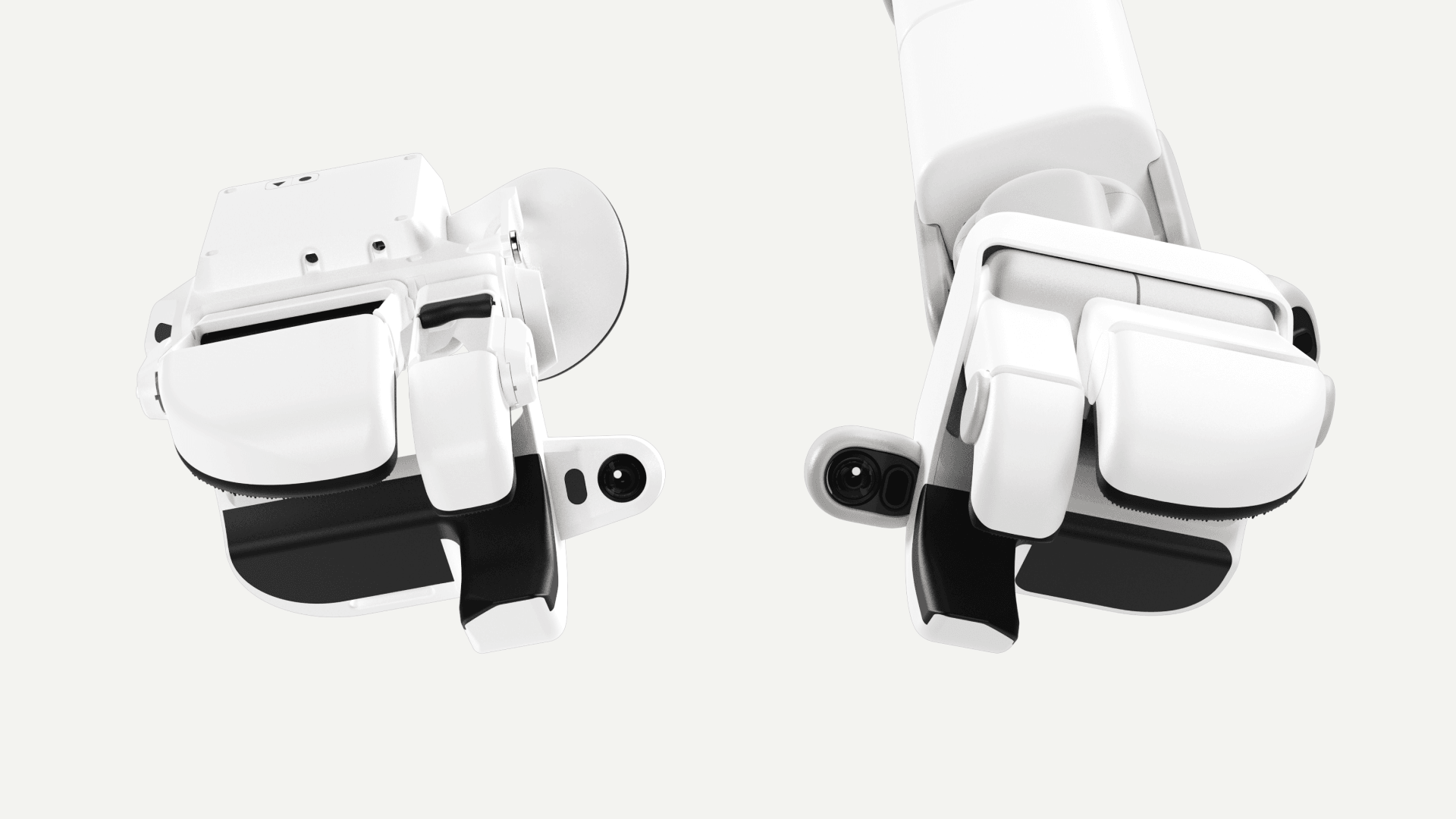

We bridge this gap by engineering the mechanical "sweet spot." We co-designed our hardware to balance the ergonomic needs of humans with the manufacturing realities of the robot—while aggressively optimizing for the aspects of hand dexterity that matter most: widening the range of objects the hand can grasp, enabling reliable tool use (sprayers, drills), and supporting everyday items like handles and strings.

The result is the Skill Capture Glove, a device that embodies this convergence. By ensuring the glove and the robot hand share the exact same geometry and sensor layout, we eliminate the translation gap entirely. The promise is simple: If a human can do it in the glove, the robot can also do it.

While the Skill Capture Glove aligns the hand interface, the rest of the embodiment remains a variable. Humans vary in height and arm length, and their physical appearance creates a significant visual domain gap—the cameras see a human arm, but the robot needs to learn from a robot arm.

To bridge these divides, we developed Skill Transform. It aligns the raw observations—both kinematic and visual—to eliminate human-specific details. This process is highly robust: We convert glove data into equivalent robot data with a 90% success rate. The result is a high-fidelity training set that looks and moves exactly as if it were generated by the robot itself.
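The post does not say how Skill Transform works internally. Purely as an illustration of what kinematic alignment across different arm lengths could involve, the Python sketch below rescales a wrist path recorded relative to a human shoulder so that it fits a robot arm of a different length. The function name `retarget_wrist_path`, the `Point3` type, the example measurements, and the uniform-scaling rule are all assumptions made for this example, not Sunday's method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Point3:
    """A 3D position in meters (hypothetical helper type)."""
    x: float
    y: float
    z: float


def retarget_wrist_path(path, human_shoulder, human_arm_len,
                        robot_shoulder, robot_arm_len):
    """Map human wrist positions into the robot's workspace.

    Assumption: each wrist position is taken relative to the shoulder,
    scaled by the arm-length ratio, and re-anchored at the robot shoulder.
    A real system would also have to handle orientation, joint limits,
    and the visual side of the alignment.
    """
    if human_arm_len <= 0 or robot_arm_len <= 0:
        raise ValueError("arm lengths must be positive")
    scale = robot_arm_len / human_arm_len
    return [
        Point3(
            robot_shoulder.x + (p.x - human_shoulder.x) * scale,
            robot_shoulder.y + (p.y - human_shoulder.y) * scale,
            robot_shoulder.z + (p.z - human_shoulder.z) * scale,
        )
        for p in path
    ]


# Made-up measurements: a 0.5 m human arm and a 0.75 m robot arm.
human_path = [Point3(0.4, 0.0, 1.4), Point3(0.2, 0.1, 1.2)]
robot_path = retarget_wrist_path(
    human_path,
    human_shoulder=Point3(0.0, 0.0, 1.4),
    human_arm_len=0.5,
    robot_shoulder=Point3(0.0, 0.0, 1.0),
    robot_arm_len=0.75,
)
```

Working relative to the shoulder keeps the shape of the motion intact while removing the demonstrator's height and reach from the data, which is the kind of human-specific detail the paragraph above describes eliminating.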

It took us over a year to engineer the core infrastructure—the Skill Capture Glove, Skill Transform, and our robot, Memo. That investment has paid off. We have trained our first foundation model, ACT-1 (act one), and we are now moving at breakneck speed. Here is what we have accomplished in the last 90 days alone.

ACT-1 successfully executes what is, to our knowledge, the most complex task ever done by a robot autonomously: Table-to-Dishwasher. This task involves picking up delicate dinnerware (wine glasses, ceramic plates, metal utensils) from a dining table, loading them into a dishwasher, dumping food waste, and operating the dishwasher. Throughout this task, ACT-1 autonomously performs 33 unique and 68 total dexterous interactions with 21 different objects while navigating more than 130 ft (about 40 m).

Table-to-Dishwasher pushes many attributes of our system to their limits: long-horizon reasoning, dexterity, range of motion, and force sensitivity. Throughout the task, ACT-1 generates actions with highly dynamic granularities, from millimeter-level manipulation to meter-level room-scale navigation.

On the left, we show some of the most challenging interactions of the Table-to-Dishwasher task.

ACT-1 can generalize to new homes with zero environment-specific training. To demonstrate this capability, we deployed ACT-1 in a series of Airbnb locations, tasking it with clearing a dining table and loading a dishwasher. At these locations, our model successfully navigates around tables, picks up utensils, and transports plates to the dishwasher.

Since these novel locations have floor plans that ACT-1 has not seen during training, the model does not know the location of the dishwasher or the dining table out of the box. To grant our model the ability to navigate in a new home, we condition it on 3D maps of its environment during training. By exposing the model to a large, diverse dataset of home layouts, it learns to interpret these maps rather than memorize specific houses. As a result, when dropped into a new house, ACT-1 is able to utilize the given 3D map for navigating to key locations. To our knowledge, ACT-1 is the first foundation model that combines long-horizon manipulation with map-conditioned navigation in a single end-to-end model.
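The idea of interpreting a supplied map rather than memorizing a house can be shown with a toy interface. The Python sketch below is not ACT-1's architecture: it is a hand-written stand-in, with a hypothetical function name (`navigation_step`), a 2D dictionary in place of a 3D map, and made-up coordinates. It only illustrates that when the layout arrives as an input, the same unchanged policy can be pointed at a home it has never seen.

```python
import math


def navigation_step(robot_xy, home_map, target_name, step_m=0.25):
    """Return the next (x, y) waypoint toward a landmark named in the map.

    Assumption: `home_map` maps landmark names to (x, y) positions in meters.
    Nothing about any particular home is stored in this function; all layout
    knowledge comes from the `home_map` argument.
    """
    target_x, target_y = home_map[target_name]
    dx = target_x - robot_xy[0]
    dy = target_y - robot_xy[1]
    distance = math.hypot(dx, dy)
    if distance <= step_m:
        # Close enough: snap to the landmark instead of overshooting.
        return (target_x, target_y)
    return (
        robot_xy[0] + dx / distance * step_m,
        robot_xy[1] + dy / distance * step_m,
    )


# Two made-up homes with different layouts.
home_a = {"dining_table": (1.0, 2.0), "dishwasher": (4.0, 2.0)}
home_b = {"dining_table": (0.0, 5.0), "dishwasher": (0.0, 1.0)}

# The same function heads toward the dishwasher in either home.
next_in_a = navigation_step(home_a["dining_table"], home_a, "dishwasher", step_m=0.5)
next_in_b = navigation_step(home_b["dining_table"], home_b, "dishwasher", step_m=0.5)
```

In a learned model the hand-written geometry would be replaced by network weights trained across many layouts, but the interface point is the same: the map is conditioning input, not something baked into the policy.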

We present two tasks that advance the frontier of robot dexterity: folding socks and pulling an espresso shot.

We've seen many robots that can fold large articles of clothing. At Sunday, we took on the challenge of folding socks. This task requires ACT-1 to identify and extract pairs from a cluttered pile, ball them, and deposit them into a basket. A successful rollout combines delicate precision pinches with high-force stretching and coordinated multi-finger movements for balling up the socks, all while adapting to the countless ways in which socks can deform and self-occlude.

We have also trained ACT-1 to operate a standard consumer espresso machine. The sequence begins by picking up the portafilter and executing a mid-air tamp, an action which demands millimeter-level precision and bimanual coordination. ACT-1 then leverages Memo’s whole-body strength to insert the portafilter into the espresso machine and generate the high torque necessary to lock the portafilter in, followed by pressing the button.

ACT-1’s generalization is built on the messy reality of lived-in homes. We are constantly surprised by what we find—from cats in dishwashers to bucketloads of plums on the table. Skill Capture Gloves are the fastest, most efficient way to sample from the true distribution of how people actually live.

Over the past 90 days, we've made remarkable progress by solving robotics' fundamental data bottleneck. Data collection isn't just about operations: our full-stack approach, from the Skill Capture Glove (MechE, EE) and Skill Transform (Software, ML) to data processing and model training, makes it orders of magnitude more efficient. The path to a robot in every home holds many more challenges, but our end-to-end thinking positions us uniquely to solve them. If you're pragmatic, deeply curious, and aligned with our mission, come build with us. Check out our open roles.

The dishes can wait.